In the world of web hosting, things can sometimes go wrong – servers can fail, data can get accidentally deleted, or cyber-attacks can happen. That’s why Backup & Restore processes are absolutely essential. They are your safety net, ensuring that you can recover your website and data when the unexpected occurs.

Backup & Restore is the process of creating copies of your website's data and storing them safely (backup), so that you can bring your website back online and recover any lost information (restore) in case of a problem. It's like having a contingency plan for your digital assets.

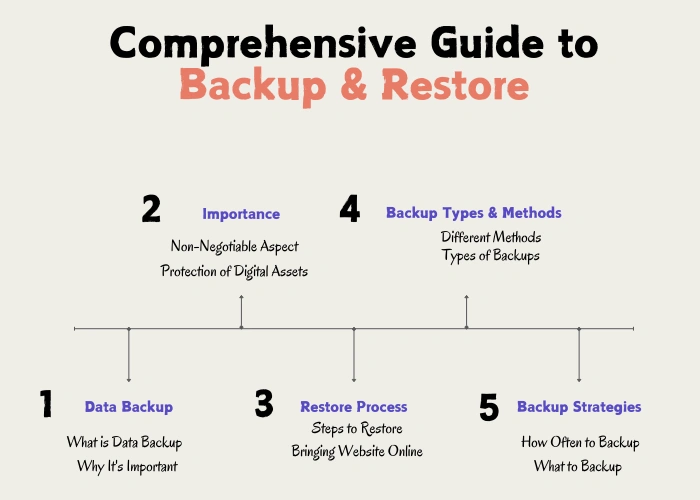

Let's explore the critical aspects of Backup & Restore. We'll cover what data backup really means, why it's non-negotiable, different backup types and methods, the restore process, how to create effective backup strategies and the importance of testing restores. We will also touch upon related concepts like Data Recovery, Disaster Recovery, Redundancy, and Failover. Understanding these concepts will empower you to protect your online presence effectively.

- 1 What is Data Backup?

- 2 Why is Backup Important?

- 3 Backup Methods & Types

- 4 What is Data Restore?

- 5 Backup Strategies - How Often & What to Backup

- 6 Testing Your Restores

- 7 Simulating a Data Loss Scenario

- 8 Example Restore Testing Metrics

- 9 Data Recovery

- 10 Common Data Loss Scenarios

- 11 Data Recovery Tools and Techniques

- 12 Disaster Recovery

- 13 Key Components of Disaster Recovery

- 14 Steps to Create a Disaster Recovery Plan

- 15 Redundancy

- 16 Types of Redundancy in IT

- 17 Benefits of Redundancy

- 18 Failover

- 19 How Failover Works

- 20 Types of Failover Mechanisms

- 21 Benefits of Failover

Content

1. Understanding Backup & Restore: Essential Data Protection Processes

Backup & Restore are two main pillars of data protection. Backup is the proactive step of copying your data. Restore is the reactive step of retrieving that data when needed. Together, they form a complete strategy for data resilience.

1.1. What is Data Backup? Creating a Safety Net for Your Digital Assets

Data backup is the systematic process of creating redundant copies of your organization's critical data. This process involves selecting specific data for protection, choosing a backup method and schedule, and securely storing the backup copies in a separate location, isolated from the primary data source.

Think of data backup as creating a failsafe archive for your valuable digital assets, similar to these real-world analogies:

- Creating Duplicate Keys: Just as you make spare keys to your home or office and store them securely, data backup creates duplicate copies of your digital information, stored safely away from the original.

- Insurance Policy for Data: Data backup acts as an insurance policy for your digital assets. In the event of data loss, corruption, or disaster, backups provide the means to recover and restore operations, minimizing downtime and financial impact.

The goal of data backup is to have a safe copy of everything you need to run your website, ready to be used if something goes wrong with the original data.

Basically, data backup is about being smart and planning ahead for your business to keep it strong. It's not just a tech thing but a must-do strategy that keeps your business running, your important info safe, and makes sure you're steady for the future, even when things online get wild.

According to a recent study, data loss incidents are on the rise, emphasizing the growing need for robust backup strategies.

1.2. Why is Backup Important?

Data backup is not just a good idea; it's a necessity for anyone with a website or online presence. Here’s why it’s so critical:

Protection Against Data Loss Scenarios

Data loss can occur due to a multitude of factors, including:

- Hardware Failure: Hard drive crashes, server malfunctions, and storage media failures are common causes of data loss. Backups ensure data is preserved even if physical hardware fails.

- Software Corruption or Errors: Software bugs, application errors, and operating system failures can corrupt data, rendering it inaccessible or unusable. Backups provide a clean copy to revert to.

- Human Error: Accidental deletions, formatting errors, and misconfigurations by employees are frequent causes of data loss. Backups allow for quick recovery from these mistakes.

- Cyberattacks and Malware: Ransomware, viruses, and other malicious attacks can encrypt, delete, or corrupt critical data. Backups are essential for recovering data without paying ransoms and minimizing disruption.

- Natural Disasters and Physical Damage: Fires, floods, earthquakes, and other disasters can destroy physical infrastructure, including servers and storage devices. Offsite backups protect data from location-specific disasters.

Ensuring Business Continuity and Minimizing Downtime

Business continuity hinges on the ability to maintain operations and recover quickly from disruptions. Data backups are the cornerstone of business continuity plans, enabling organizations to:

- Reduce Downtime: Quickly restore systems and data to minimize service interruptions and maintain productivity.

- Maintain Operations: Ensure critical business functions can continue even during or after a disruptive event.

- Protect Revenue and Reputation: Minimize financial losses and reputational damage associated with prolonged downtime and data loss.

Facilitating Data Recovery from Errors and Accidents

Mistakes happen, and data backups provide a safety net for recovering from:

- Accidental Deletions: Easily restore accidentally deleted files, folders, or databases.

- Configuration Errors: Revert to previous configurations if system changes cause instability or performance issues.

- Failed Updates or Migrations: Roll back to a stable state if software updates or data migrations go wrong.

Meeting Legal and Regulatory Compliance Requirements

Many regulations and compliance standards mandate data backup and retention, including:

- Data Protection Regulations: GDPR, HIPAA, PCI DSS, and other regulations require organizations to protect personal and sensitive data, including maintaining backups for data recovery.

- Industry-Specific Standards: Various industries have specific data retention and backup requirements to ensure data integrity and availability for audits and legal purposes.

- Legal and Contractual Obligations: Organizations may have legal or contractual obligations to maintain data backups for specific periods.

Providing Peace of Mind and Data Confidence

Beyond the tangible benefits, data backup provides invaluable peace of mind:

- Data Security Assurance: Knowing backups are in place provides confidence that data is safe and recoverable.

- Focus on Core Business: Reduces anxiety and allows organizations to focus on core business activities without constant worry about data loss.

- Improved Decision Making: Enables bolder decisions and innovations, knowing data risks are mitigated.

1.3. Backup Methods & Types: Choosing the Right Approach for Your Needs

There are different ways to back up your data, and each has its own benefits. Knowing these helps you pick the backup plan that works best for you.

Backup Methods: Strategies for Copying Your Data

Full Backup: The Gold Standard of Data Protection

Full backup is the most comprehensive and straightforward backup method. It involves creating a complete copy of all selected data, regardless of when it was last backed up.

Advantages:

- Simplicity and Ease of Restore: Full backups offer the simplest restore process. Because all data is contained within a single backup set, recovery is fast and straightforward, requiring only the latest full backup to restore all data.

- Complete Data Set: Each full backup is a self-contained, complete copy of your data, providing maximum data redundancy within each backup set.

Disadvantages:

- Time-Consuming Backups: Full backups take the longest time to complete, especially for large datasets, potentially impacting backup windows and system performance.

- High Storage Consumption: Full backups consume the most storage space, as each backup duplicates the entire dataset, leading to higher storage costs over time.

- Resource Intensive: The process of creating full backups can be resource-intensive, placing a significant load on systems during backup operations.

Technical Detail: Full backups operate by reading every block or file selected for backup and writing it to the backup storage medium. This method ensures a complete and independent copy of the data in each backup set. For more technical details, resources like IBM's documentation on backup types can be helpful.
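As a minimal sketch of this behavior, the Python helper below copies an entire directory tree into a new backup set. The function name `full_backup`, the `label` argument, and the directory layout are illustrative assumptions, not the interface of any particular backup product.

```python
import shutil
from pathlib import Path


def full_backup(source: Path, backup_root: Path, label: str) -> Path:
    """Copy every file under `source` into a new, self-contained backup set."""
    target = backup_root / label
    # copytree copies the whole tree regardless of when files last changed.
    # It raises FileExistsError if `target` already exists, so an earlier
    # backup set is never silently overwritten.
    shutil.copytree(source, target)
    return target
```

Because each call writes a complete copy, any single backup set produced this way can be restored on its own, at the cost of copying unchanged files again on every run.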

Incremental Backup: Efficiency and Speed for Frequent Backups

Incremental backup is designed for efficiency, focusing on backing up only the data that has changed since the most recent backup of any type (full or incremental). This method significantly reduces backup time and storage space.

Advantages:

- Fast Backup Speed: Incremental backups are the fastest backup type after the initial full backup, as they only copy changed data, minimizing backup windows.

- Storage Efficiency: They consume the least storage space compared to full and differential backups, leading to lower storage costs and efficient use of backup media.

- Reduced Bandwidth Usage: For offsite backups, incremental backups minimize the amount of data transferred over the network, reducing bandwidth consumption.

Disadvantages:

- Complex and Time-Consuming Restores: Restore processes are more complex and time-consuming. Recovery requires the last full backup and all subsequent incremental backups in chronological order, making restores more intricate and potentially slower.

- Increased Restore Failure Risk: The dependency on a chain of backups means that if any incremental backup in the chain is corrupted or missing, the entire restore process can be compromised.

Technical Detail: Incremental backups track changes at the file or block level. They typically use file system attributes like archive bits and modification timestamps to identify changed files. Changed Block Tracking (CBT) is used in virtualized environments to track changed blocks within virtual disks, further enhancing efficiency. More information on CBT can be found in VMware's documentation on Changed Block Tracking (CBT).
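The timestamp-based approach can be sketched in a few lines of Python. This is a simplified illustration, assuming that file modification times are trustworthy; the helper name `changed_since` is hypothetical, and the sketch does not cover archive bits, block-level tracking, or deleted files.

```python
from pathlib import Path


def changed_since(source: Path, since: float) -> list[Path]:
    """Return relative paths of files under `source` modified after `since`.

    `since` is a Unix timestamp. For an incremental backup it would be the
    time of the most recent backup of any type.
    """
    return sorted(
        path.relative_to(source)
        for path in source.rglob("*")
        if path.is_file() and path.stat().st_mtime > since
    )
```

Only the files returned by such a check would be copied into the incremental set, which is why these backups are small and fast.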

Differential Backup: Balancing Speed and Restore Simplicity

Differential backup offers a compromise between full and incremental backups. It backs up all the data that has changed since the last full backup, so each differential backup contains the cumulative changes since that full backup and does not depend on any earlier differential backup.

Advantages:

- Faster Restores than Incremental Backups: Restores are faster and simpler than incremental backups because only two backup sets are needed: the last full backup and the latest differential backup.

- Faster Backups than Full Backups (After Initial Full): Differential backups are quicker than full backups after the initial full backup, as they only copy changed data.

- Improved Data Redundancy Compared to Incrementals: Each differential backup is less dependent on previous backups compared to incremental backups, reducing the risk of restore failures due to a corrupted backup chain.

Disadvantages:

- Larger Backups and More Storage than Incrementals: Differential backups are larger and consume more storage space than incremental backups because they include all changes since the last full backup in each differential set.

- Slower Backups than Incrementals: Although faster than full backups, differential backups grow with every run until the next full backup, so they take longer to create than incremental backups. Restores, by contrast, are usually faster than with incrementals because only two backup sets are needed.

Technical Detail: Differential backups, unlike incremental backups, always refer back to the last full backup as the baseline. Each differential backup captures all changes made since that full backup. This approach simplifies the restore process, requiring only the last full backup and the most recent differential set. For a deeper technical understanding, resources like Veeam's blog on backup methods provide excellent insights.
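The difference in restore dependencies between the methods can be illustrated with a short Python sketch. The `BackupSet` type and `restore_chain` function are hypothetical names used for illustration, and the sketch assumes the backup history is listed oldest first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupSet:
    name: str
    kind: str  # "full", "incremental", or "differential"


def restore_chain(history: list[BackupSet]) -> list[BackupSet]:
    """Return the sets needed to restore to the newest point in `history`.

    Raises ValueError if the history contains no full backup.
    """
    last_full = max(i for i, b in enumerate(history) if b.kind == "full")
    chain = [history[last_full]]
    for backup in history[last_full + 1:]:
        if backup.kind == "differential":
            # A differential holds every change since the full backup,
            # so it replaces whatever was applied after the full so far.
            chain = [history[last_full], backup]
        elif backup.kind == "incremental":
            # An incremental only holds changes since the previous backup,
            # so every one of them must be applied in order.
            chain.append(backup)
    return chain
```

With one full backup followed by three incrementals, the chain contains four sets. With one full backup followed by three differentials, it contains only two: the full backup and the latest differential.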

Backup Types (by Location): Choosing the Right Storage Destination

Local Backup: Speed and Convenience for Quick Recovery

Local backup involves storing backup data on storage devices that are physically located in the same site as the data being backed up. This typically includes:

- External Hard Drives: Portable and easy to use for small to medium-sized backups.

- Network Attached Storage (NAS): Centralized storage devices on the local network, offering shared access and scalability.

- Dedicated Backup Servers On-Site: Servers located within the organization's premises, configured specifically for backup storage.

- Separate Partitions or Volumes: Creating distinct partitions or volumes on the same server for storing backups, although less secure against hardware failures affecting the entire server.

Advantages:

- Fastest Backup and Restore Speeds: Local backups offer the fastest data transfer rates, as they operate within the local network, enabling quick backup and restore operations. This minimizes downtime and ensures rapid recovery.

- Simple Implementation and Access: Setting up local backups is generally straightforward, and accessing backup data is quick and easy, especially for routine restores and data retrieval.

- Cost-Effective for Initial Setup: Local backup solutions can be more cost-effective for initial setup, as they may not involve recurring subscription fees associated with cloud services.

Disadvantages:

- Vulnerability to Site-Specific Disasters: The most significant drawback of local backups is their vulnerability to disasters that affect the primary site. If a fire, flood, or other disaster damages the primary location, both the original data and the local backups can be lost, rendering them ineffective for disaster recovery.

- Limited Scalability and Redundancy: Local backup solutions may have limited scalability compared to cloud options, and achieving high levels of data redundancy and geographic diversity can be complex and costly.

- Higher Management Overhead: Organizations are responsible for managing and maintaining local backup infrastructure, including hardware, software, and media, which can require dedicated IT resources.

Optimal Use Case: Local backups are best suited for scenarios requiring rapid recovery from minor data loss events, such as accidental file deletions, software errors, or localized hardware failures. They are ideal for organizations that prioritize speed and convenience for day-to-day restores and have less stringent disaster recovery requirements. For further insights, consider resources like TechTarget's definition of local backup.

Offsite Backup: Disaster Protection and Data Resilience

Offsite backup involves storing backup data in a geographically separate location from the primary data center or office. This strategy is crucial for disaster recovery and business continuity, protecting data from site-specific disasters. Common offsite backup locations include:

- Secondary Data Centers: Dedicated facilities in different geographic locations owned or leased by the organization.

- Managed Service Providers (MSPs): Third-party providers that offer offsite backup services and infrastructure.

- Colocation Facilities: Data centers where organizations rent space and infrastructure to house their backup equipment.

Advantages:

- Protection Against Site-Wide Disasters: Offsite backups provide critical protection against location-specific disasters such as fires, floods, earthquakes, and regional power outages. In such events, offsite backups ensure data remains safe and recoverable, enabling business continuity.

- Enhanced Data Security and Compliance: Storing backups offsite, especially in secure data centers, can enhance data security and help meet regulatory compliance requirements for data protection and geographic diversity.

- Improved Business Continuity and Disaster Recovery: Offsite backups are a cornerstone of disaster recovery planning, ensuring that organizations can restore critical data and systems even if the primary site is completely compromised.

Disadvantages:

- Slower Backup and Restore Speeds: Compared to local backups, offsite backups typically involve slower data transfer rates, as they rely on network connections over longer distances. This can increase backup windows and restore times.

- Higher Bandwidth Requirements: Transferring large volumes of data offsite requires significant network bandwidth, which can be costly and may impact network performance.

- Increased Complexity and Management: Managing offsite backups can be more complex, requiring coordination with secondary data centers or MSPs, and potentially involving more intricate logistics and security protocols.

Optimal Use Case: Offsite backups are essential for comprehensive disaster recovery and business continuity strategies. They are ideal for organizations that need to protect against site-wide disasters, meet stringent compliance requirements, and ensure data availability even in catastrophic scenarios. Resources like Veritas' documentation on offsite backups offer valuable insights.

Cloud Backup: Scalability, Automation, and Cost Efficiency

Cloud backup, also known as online backup, is a form of offsite backup where data is stored in a cloud service provider's data centers. Cloud backup solutions offer numerous advantages, including scalability, automation, and cost efficiency. Key aspects of cloud backup include:

- Cloud Storage Infrastructure: Data is stored on the provider's infrastructure, which typically consists of geographically distributed data centers with robust redundancy and security measures.

- Managed Services and Automation: Cloud backup services are often fully managed, automating backup scheduling, execution, monitoring, and reporting.

- Scalability and Flexibility: Cloud backup offers virtually unlimited scalability, allowing organizations to easily adjust storage capacity as data volumes grow.

- Accessibility and Disaster Recovery: Cloud backups are accessible from anywhere with an internet connection, facilitating remote data recovery and ensuring business continuity in case of disasters.

Advantages:

- Scalability and Flexibility: Cloud backup provides unparalleled scalability, allowing organizations to scale storage capacity up or down as needed, paying only for the storage consumed. This flexibility is ideal for businesses with fluctuating data volumes.

- Automation and Managed Services: Cloud backup services automate most backup operations, reducing administrative overhead and freeing up IT staff for other critical tasks. Managed services ensure backups are performed reliably and consistently.

- Cost Efficiency: Cloud backup can be more cost-effective than traditional backup methods, especially for small and medium-sized businesses, as it eliminates the need for upfront investments in hardware and infrastructure. The Azure Backup pricing model is an example of pay-as-you-go efficiency.

- Offsite by Default and Disaster Recovery: Cloud backups inherently provide offsite protection, safeguarding data from site-specific disasters. Cloud providers offer robust redundancy and disaster recovery capabilities, ensuring high data availability and durability.

- Accessibility and Ease of Management: Cloud backups are easily accessible from anywhere with an internet connection, simplifying data recovery and management. Centralized management consoles provide intuitive interfaces for monitoring and managing backups.

Disadvantages:

- Internet Dependency and Bandwidth Limitations: Cloud backup and restore speeds are heavily dependent on internet bandwidth and reliability. Organizations with limited bandwidth or unreliable internet connections may experience slower backup and restore performance.

- Data Security and Privacy Concerns: Entrusting data to a third-party cloud provider raises data security and privacy concerns. Organizations must carefully evaluate the provider's security measures, compliance certifications, and data handling policies to ensure data is protected. AWS Security and compliance features are examples of cloud provider security investments.

- Vendor Lock-In: Adopting a specific cloud backup provider can lead to vendor lock-in, making it challenging to switch providers or migrate data in the future.

- Recovery Time Objectives (RTOs): While cloud backups offer excellent disaster recovery capabilities, restore times, especially for large datasets, can be longer than local backups, potentially impacting RTOs.
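To get a rough feel for how bandwidth affects restore times, the following Python sketch estimates how long a download of a given size would take. It assumes decimal units (1 GB = 8,000 megabits) and a hypothetical `efficiency` factor for protocol overhead and link contention; real restores also depend on provider throttling, decompression, and disk speed, which are not modeled here.

```python
def estimated_restore_hours(size_gb: float, link_mbps: float,
                            efficiency: float = 0.8) -> float:
    """Estimate the hours needed to download `size_gb` over a `link_mbps` link."""
    if size_gb < 0 or link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("size must be >= 0, bandwidth > 0, efficiency in (0, 1]")
    megabits = size_gb * 8 * 1000                   # GB -> megabits (decimal units)
    seconds = megabits / (link_mbps * efficiency)   # usable throughput only
    return seconds / 3600


# Example: 500 GB over a 100 Mbps link at 80% efficiency
print(f"{estimated_restore_hours(500, 100):.1f} hours")  # prints "13.9 hours"
```

Comparing an estimate like this with your recovery time target is a quick way to see whether a cloud-only restore is realistic or whether a local copy is also needed.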

Optimal Use Case: Cloud backup is ideal for organizations seeking scalable, automated, and cost-effective backup solutions with robust offsite protection. It is particularly well-suited for businesses with distributed operations, remote workforces, and those prioritizing disaster recovery and business continuity. Cloud backup is also an excellent option for organizations looking to reduce IT management overhead and leverage the scalability and reliability of cloud infrastructure. For more detailed information, Backblaze's guide to cloud backup services provides a comprehensive overview.

1.4. Backup Strategies: Defining What, When, and Where to Back Up

Developing a robust backup strategy is essential for ensuring effective data protection. A well-defined backup strategy outlines what data to back up, how frequently to back it up, and where to store the backup copies. Key considerations for formulating a comprehensive backup strategy include:

Defining What Data to Back Up: Identifying Critical Assets

The first step in a backup strategy is to identify the critical data assets that require protection. This involves categorizing data based on its importance, sensitivity, and business impact. Key data categories to consider for backup include:

- Website Files and Content: All files, code, images, themes, and content that constitute your website. This includes HTML files, CSS, JavaScript, media files, and CMS content.

- Databases: Databases that store dynamic content, user data, application settings, and transactional records. Examples include MySQL, Microsoft SQL Server, Oracle, and PostgreSQL databases.

- Emails and Communication Data: Email data, including mailboxes, email archives, and communication logs, especially for business communications and compliance purposes.

- Server and System Configurations: Operating system settings, application configurations, network configurations, and server-specific settings that define how your servers and systems operate.

- Virtual Machines (VMs) and Containers: Backups of virtual machines and containers, including VM images, container configurations, and associated data volumes.

- Endpoint Devices (Laptops, Desktops): Data on employee laptops and desktops, especially for remote workers or organizations with distributed workforces.

- SSL/TLS Certificates and Security Keys: Digital certificates and encryption keys used for secure website access (HTTPS) and data encryption.

Determining Backup Frequency: Aligning with Data Change Rate and RPO

How often you back up your data depends on how quickly the data changes and on your organization's Recovery Point Objective (RPO), the maximum amount of data, measured in time, that you can afford to lose in an incident. Common backup frequencies include:

- Daily Backups: Performing backups daily is a common practice for organizations with frequently updated websites, dynamic data, and critical business operations.

- Weekly Backups: Weekly backups may be sufficient for websites and data that change less frequently.

- Hourly Backups: For highly dynamic data and applications that require minimal data loss, hourly backups may be necessary.

- Real-time Backups/Continuous Data Protection (CDP): For mission-critical applications and data that demand near-zero data loss tolerance, real-time backups or Continuous Data Protection (CDP) are employed.

- Transaction Log Backups (Databases): For databases, transaction log backups are performed frequently (e.g., every few minutes) in addition to full or differential backups.
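The link between RPO and backup frequency can be sketched in Python as follows. The sketch assumes that the worst-case data loss is roughly one backup interval (a failure just before the next backup runs) and ignores how long the backup itself takes. The schedule names and intervals are illustrative assumptions.

```python
from datetime import timedelta
from typing import Optional

# Hypothetical schedule options and their intervals
SCHEDULES = {
    "weekly": timedelta(weeks=1),
    "daily": timedelta(days=1),
    "hourly": timedelta(hours=1),
    "transaction log every 5 minutes": timedelta(minutes=5),
}


def least_frequent_schedule(rpo: timedelta) -> Optional[str]:
    """Return the least frequent schedule whose interval still fits the RPO.

    Returns None when no listed schedule is frequent enough, which suggests
    that continuous data protection may be required.
    """
    candidates = [(interval, name) for name, interval in SCHEDULES.items()
                  if interval <= rpo]
    return max(candidates)[1] if candidates else None
```

For example, an RPO of four hours rules out daily backups and points to the hourly schedule, while an RPO of one minute is not met by any schedule in the table.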

Choosing Backup Destinations: Implementing the 3-2-1 Rule

Selecting appropriate backup destinations is crucial for ensuring data resilience and recoverability. A key strategy for backup destinations is the 3-2-1 rule, which recommends having:

- 3 Copies of Your Data: Maintain at least three copies of your data: the primary production data and two backup copies.

- 2 Different Storage Media: Store backup copies on at least two different types of storage media to protect against media-specific failures.

- 1 Offsite Copy: Keep at least one backup copy in an offsite location that is geographically separated from the primary data center.
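A backup plan can be checked against the three parts of this rule with a small Python sketch. The `DataCopy` type and its fields are hypothetical, and the sketch follows the wording above by counting storage media across the backup copies only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCopy:
    name: str
    media: str           # e.g. "ssd", "nas", "tape", "cloud-object-storage"
    offsite: bool
    primary: bool = False


def check_3_2_1(copies: list[DataCopy]) -> dict[str, bool]:
    """Report which parts of the 3-2-1 rule a set of copies satisfies."""
    backups = [c for c in copies if not c.primary]
    return {
        "three_copies": len(copies) >= 3,                   # primary + 2 backups
        "two_media": len({c.media for c in backups}) >= 2,  # distinct media types
        "one_offsite": any(c.offsite for c in backups),     # at least one offsite
    }


plan = [
    DataCopy("production server", "ssd", offsite=False, primary=True),
    DataCopy("local NAS backup", "nas", offsite=False),
    DataCopy("cloud backup", "cloud-object-storage", offsite=True),
]
print(check_3_2_1(plan))
```

A plan that passes all three checks still needs restore testing; the check only confirms that the copies exist where the rule says they should.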

Implementing Backup Automation and Management

Automation is crucial for ensuring consistent and reliable backups. Key automation and management practices include:

- Automate Backup Schedules: Utilize backup software and tools to automate backup scheduling, ensuring backups are performed regularly without manual intervention.

- Centralized Backup Management: Implement centralized backup management platforms to monitor, manage, and report on backup operations across the organization.

- Use Backup Software and Tools: Leverage specialized backup software and tools that offer features such as scheduling and automation, compression and deduplication, encryption, and centralized monitoring and reporting.

Defining Backup Retention Policies

Establishing clear backup retention policies is essential for managing backup storage, meeting compliance requirements, and optimizing backup infrastructure. Key aspects of retention policies include:

- Define Retention Periods: Determine how long to retain backup copies based on data criticality, regulatory requirements, and storage capacity.

- Implement a Backup Rotation Scheme: Use a backup rotation scheme, such as Grandfather-Father-Son (GFS) or Tower of Hanoi, to manage backup sets efficiently and adhere to retention policies.

- Archival and Long-Term Retention: Define policies for archiving and long-term retention of backups for compliance, legal, or historical purposes.

- Regular Review and Adjustment: Periodically review and adjust backup retention policies to align with changing business needs, data growth, and regulatory requirements.
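As an illustration of a rotation scheme, the Python sketch below implements one simple variant of Grandfather-Father-Son retention: keep the most recent daily backups, the newest backup from each of the most recent ISO weeks, and the newest backup from each of the most recent months. The function name, the default counts, and this particular interpretation of GFS are assumptions; backup products define the scheme in differing ways.

```python
from datetime import date


def gfs_keep(backup_dates, daily: int = 7, weekly: int = 4,
             monthly: int = 12) -> set[date]:
    """Return the backup dates to retain under a simple GFS variant."""
    newest_first = sorted(set(backup_dates), reverse=True)

    def newest_per_period(key, limit):
        seen = {}
        for day in newest_first:
            seen.setdefault(key(day), day)   # first hit is the newest in that period
        return list(seen.values())[:limit]   # periods are ordered newest first

    keep = set(newest_first[:daily])                                          # sons
    keep.update(newest_per_period(lambda d: d.isocalendar()[:2], weekly))     # fathers
    keep.update(newest_per_period(lambda d: (d.year, d.month), monthly))      # grandfathers
    return keep
```

Any backup whose date is not in the returned set would be a candidate for deletion or archival under the retention policy.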

A well-structured backup strategy is tailored to your organization's unique data landscape, business requirements, and risk appetite. It is a dynamic plan that should be regularly reviewed, tested, and updated to ensure it remains effective in protecting your valuable data assets.

1.5. Best Practices for Data Backup: Ensuring Reliability and Efficiency

Implementing data backup effectively requires adherence to industry best practices to guarantee backup reliability, operational efficiency, data security, and recoverability. These best practices provide a framework for establishing a robust and resilient data protection system.

To maximize the effectiveness of your data backup strategy and minimize data loss risks, consider these essential best practices:

1. Implement the 3-2-1 Backup Rule: The Foundation of Data Redundancy

The 3-2-1 rule is a cornerstone of robust backup strategies, providing a simple yet effective framework for data redundancy and disaster protection. It mandates maintaining:

- Three Copies of Your Data: Always have at least three copies of your data:

  - Primary Data: Your live, production data that users and applications access regularly.

  - Primary Backup (Local): A backup copy stored locally for fast restores and operational recovery.

  - Secondary Backup (Offsite): An offsite backup copy for disaster recovery and protection against site-specific events.

- Two Different Storage Media: Utilize at least two different types of storage media for your backup copies to mitigate media-specific failure risks. Diversify storage media by combining:

  - Disk and Tape: Disk-based backups for speed and tape for archival and long-term storage.

  - Local and Cloud: Local storage for rapid recovery and cloud for offsite protection and scalability.

  - Different Storage Vendors/Technologies: Mix storage vendors or technologies to avoid technology-specific vulnerabilities or failures affecting all backup copies.

- One Offsite Copy: Ensure at least one backup copy is stored offsite, geographically separated from your primary data center. Offsite backups are crucial for disaster recovery and business continuity, protecting against:

  - Natural Disasters: Fires, floods, earthquakes, and other location-specific disasters.

  - Regional Outages: Power outages, network disruptions, and regional infrastructure failures.

  - Site-Wide Incidents: Facility-level incidents that can compromise both primary and local backup data.

By adhering to the 3-2-1 rule, organizations significantly enhance their data resilience, improve recovery options, and minimize the risk of irreversible data loss. Resources like US-CERT's guide to data backup recommend implementing the 3-2-1 rule as a fundamental best practice.

2. Automate Backups and Scheduling: Ensuring Consistency and Reliability

Backup automation is essential for consistent and reliable data protection. Implement automation for:

- Scheduled Backups: Automate backup schedules to run regularly without manual intervention. Use backup software or scripts to schedule backups daily, hourly, or at other intervals based on your RPO requirements.

- Unattended Backups: Configure backups to run unattended, minimizing the need for manual oversight and reducing the risk of missed backups due to human error.

- Centralized Management: Utilize centralized backup management tools to automate, monitor, and manage backup jobs across your infrastructure. Centralized management simplifies administration and improves backup consistency.

- Alerting and Notifications: Set up automated alerts and notifications to promptly identify backup failures, errors, or missed backups. Proactive alerting ensures timely issue resolution and maintains backup reliability.

Automation ensures backups are performed consistently and reliably, reducing the risk of human error and ensuring data is protected according to defined schedules and policies.
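The alerting idea can be sketched in Python as a check that flags any job whose last successful run is older than its expected interval. The job names, the `grace` allowance, and the shape of the inputs are assumptions for illustration; real backup software reports job status in its own format.

```python
from datetime import datetime, timedelta


def overdue_jobs(last_success: dict[str, datetime],
                 expected_every: dict[str, timedelta],
                 now: datetime,
                 grace: timedelta = timedelta(minutes=30)) -> list[str]:
    """Return the names of jobs that have missed their expected backup window."""
    overdue = []
    for job, interval in expected_every.items():
        finished = last_success.get(job)
        # A job with no recorded success, or one that last succeeded longer
        # ago than its interval plus the grace period, is treated as missed.
        if finished is None or now - finished > interval + grace:
            overdue.append(job)
    return sorted(overdue)
```

A scheduler could run a check like this every few minutes and send a notification for each job name it returns.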

3. Choose the Right Backup Types and Methods: Tailoring to Data Characteristics and RPO/RTO

Select backup types and methods that align with your data characteristics, RTO, RPO, and business requirements. Consider these factors:

- Full Backups for Simplicity and Baseline Protection: Use full backups periodically to establish a complete baseline copy of your data. Full backups simplify restores and provide a comprehensive recovery point.

- Incremental or Differential Backups for Efficiency: Implement incremental or differential backups for frequent backups between full backups to reduce backup time, storage consumption, and bandwidth usage. Choose between incremental and differential backups based on your restore speed and storage efficiency priorities.

- Application-Aware Backups for Databases and Applications: Utilize application-aware backup solutions for databases (e.g., SQL Server, Oracle, MySQL) and business-critical applications. Application-aware backups ensure transactional consistency and reliable recovery for complex applications.

- Image-Based Backups for System Recovery: Employ image-based backups for operating systems, virtual machines, and servers to enable rapid system recovery and bare-metal restores. Image-based backups capture entire system volumes, facilitating quick recovery from system failures.

Selecting the right backup types and methods ensures backups are efficient, meet recovery objectives, and provide appropriate levels of protection for different data types and systems.

4. Regularly Test Restores: Validating Recovery Readiness and RTO

Regular restore testing is paramount to validate backup integrity, recovery procedures, and RTOs. Implement a routine restore testing schedule:

- Schedule Regular Restore Tests: Conduct restore tests regularly, such as monthly or quarterly, to ensure backups are restorable and recovery procedures are effective. Routine testing identifies issues proactively.

- Simulate Data Loss Scenarios: Simulate realistic data loss scenarios during testing to validate recovery procedures under different failure conditions. Test restores from different backup types and locations.

- Measure Recovery Times and Track Performance Metrics: Measure actual recovery times during restore tests, compare them against your RTO targets, and track performance metrics to assess recovery speed and identify areas for improvement. Document the measurements and track trends over time.

- Document Test Results and Refine Procedures: Document all test results, findings, and lessons learned. Use test outcomes to refine backup and restore procedures, update documentation, and improve team preparedness.

Regular restore testing ensures that backups are reliable, recovery procedures are effective, and RTO targets can be achieved, providing confidence in data recovery readiness.

5. Monitor Backups and Verify Success: Ensuring Backup Operations Are Effective

Proactive backup monitoring and verification are essential for ensuring backup operations are successful and data is consistently protected. Implement monitoring and verification practices:

- Centralized Monitoring Dashboard: Utilize a centralized backup monitoring dashboard to track backup job status, success rates, errors, and storage utilization. Centralized dashboards provide real-time visibility into backup operations.

- Automated Backup Verification: Implement automated backup verification processes to confirm backup job success and data integrity. Backup software often includes built-in verification tools.

- Regular Log Reviews: Periodically review backup logs for errors, warnings, or anomalies that may indicate backup issues. Log analysis helps identify and resolve backup problems proactively.

- Proactive Alerting and Notifications: Set up proactive alerts and notifications to promptly identify backup failures, errors, missed backups, or storage threshold breaches. Prompt alerts enable timely corrective actions.

- Reporting and Analysis: Generate regular backup reports to track backup performance, success rates, storage trends, and RTO metrics. Reporting provides insights for capacity planning and process optimization.

Continuous monitoring and verification ensure that backup operations are functioning as expected, data is being protected effectively, and potential issues are identified and resolved promptly.
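As an illustration of the alerting logic described above, here is a minimal sketch that scans job history and flags backups that failed, were missed, or never ran. The job names, the record format, and the 26-hour freshness window are assumptions chosen for the example; a real backup tool will expose its own status data.

```python
from datetime import datetime, timedelta


def check_backup_jobs(jobs, now, max_age=timedelta(hours=26)):
    """Return alert strings for jobs that failed, were missed, or never ran.

    jobs maps a job name to a list of (finished_at, status) tuples.
    """
    alerts = []
    for name, runs in sorted(jobs.items()):
        if not runs:
            alerts.append(f"{name}: NEVER RAN")
            continue
        finished_at, status = max(runs, key=lambda run: run[0])  # most recent run
        if status != "success":
            alerts.append(f"{name}: FAILED")
        elif now - finished_at > max_age:
            # The last run succeeded but is too old: a scheduled run was missed.
            alerts.append(f"{name}: MISSED")
    return alerts


# Hypothetical job history for a daily schedule.
now = datetime(2025, 3, 15, 8, 0)
jobs = {
    "database": [(now - timedelta(hours=6), "success")],
    "web-files": [(now - timedelta(hours=30), "success"), (now - timedelta(hours=6), "failed")],
    "mail": [(now - timedelta(days=3), "success")],
    "configs": [],
}
alerts = check_backup_jobs(jobs, now)
print(alerts)
```

The freshness window is set slightly longer than the schedule interval so that a backup that simply runs a little late does not raise a false alarm.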

6. Secure Backup Data: Protecting Data Confidentiality and Integrity

Security is paramount for backup data, as backups often contain sensitive and critical information. Implement robust security measures to protect backup data:

- Encryption at Rest and in Transit: Encrypt backup data both at rest (when stored) and in transit (during transfer) to protect data confidentiality. Use strong encryption algorithms and sound key management practices: store keys separately from the backups they protect, because a lost key makes an encrypted backup unrecoverable.

- Access Controls and Authentication: Implement strict access controls and multi-factor authentication (MFA) for backup systems, storage, and management interfaces. Limit access to authorized personnel only.

- Secure Storage Locations: Store backup media and storage devices in secure locations with physical access controls and environmental protections. Secure physical storage prevents unauthorized access and environmental damage.

- Regular Security Audits and Vulnerability Assessments: Conduct periodic security audits and vulnerability assessments of backup infrastructure to identify and remediate security weaknesses. Regular audits ensure ongoing security effectiveness.

- Data Isolation and Segregation: Isolate backup networks, storage, and systems from production environments to prevent lateral movement of threats and unauthorized access. Network segmentation enhances backup security.

Securing backup data protects sensitive information from unauthorized access, breaches, and cyber threats, maintaining data confidentiality and integrity.

7. Optimize Backup Storage and Retention: Managing Costs and Compliance

Efficiently managing backup storage and retention is crucial for cost optimization, compliance adherence, and operational efficiency. Implement storage and retention best practices:

- Data Deduplication and Compression: Utilize data deduplication and compression technologies to reduce backup storage footprint, minimize storage costs, and optimize bandwidth usage:

  - Data Deduplication: Eliminates redundant data blocks, significantly reducing storage requirements.

  - Compression: Reduces data size, further optimizing storage utilization and transfer efficiency.

- Tiered Storage for Backup Data: Implement tiered storage strategies to optimize storage costs based on backup frequency, retention requirements, and recovery needs:

  - Flash or SSD Storage: For high-performance, low-latency restores and frequently accessed backups.

  - Disk-based Storage (HDD): For primary backups, daily backups, and medium-term retention.

  - Tape Storage or Cloud Archive Storage: For long-term archiving, compliance retention, and less frequently accessed backups.

- Backup Retention Policies and Lifecycle Management: Define and enforce clear backup retention policies to manage backup lifecycle, optimize storage utilization, and meet compliance requirements:

  - Automated Retention Management: Automate the deletion or archiving of backups according to defined policies, ensuring efficient storage use.

  - Retention Periods: Align retention periods with how far back you may need to recover and with compliance mandates (e.g., GDPR, HIPAA).

- Capacity Planning and Storage Monitoring: Regularly monitor backup storage capacity, track storage trends, and plan for future storage needs:

  - Storage Capacity Alerts: Set up alerts to notify when storage thresholds are approaching limits.

  - Proactive Capacity Planning: Ensure sufficient storage resources are available to meet backup requirements without disruptions.

Optimizing backup storage and retention reduces storage costs, improves storage efficiency, and ensures compliance with data retention regulations and organizational policies.
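Automated retention management can be sketched as a function that decides which backups to keep and leaves the rest eligible for deletion or archiving. The example below assumes one common scheme (keep recent dailies, then the newest backup of each recent week and month); the function name and the default counts are illustrative, not a recommendation for any particular compliance regime.

```python
from datetime import date, timedelta


def backups_to_keep(backup_dates, today, daily=7, weekly=4, monthly=12):
    """Return the set of backup dates a tiered retention policy would keep."""
    dates = sorted(set(backup_dates), reverse=True)  # newest first
    # Keep every backup taken within the last `daily` days.
    keep = {d for d in dates if (today - d).days < daily}

    def keep_newest_per_bucket(bucket_of, limit):
        seen = []
        for d in dates:
            bucket = bucket_of(d)
            if bucket not in seen:
                if len(seen) == limit:
                    break  # older buckets fall outside the policy
                seen.append(bucket)
                keep.add(d)  # first date seen in a bucket is its newest

    keep_newest_per_bucket(lambda d: tuple(d.isocalendar()[:2]), weekly)  # (ISO year, week)
    keep_newest_per_bucket(lambda d: (d.year, d.month), monthly)
    return keep


# Hypothetical history: one backup per day for the 60 days up to 2025-03-15.
today = date(2025, 3, 15)
history = [today - timedelta(days=i) for i in range(60)]
keep = backups_to_keep(history, today)
expired = sorted(set(history) - keep)
print(len(keep), "kept,", len(expired), "eligible for deletion or archiving")
```

Returning the set to keep, rather than deleting inside the function, makes the policy easy to review and test before anything is removed.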

8. Document Backup Procedures and DRP: Ensuring Clarity and Preparedness

Comprehensive documentation is essential for effective backup and recovery operations. Document key aspects of your backup strategy:

- Detailed Backup Procedures: Create step-by-step documentation for all backup and restore procedures, including:

  - Backup Scheduling and Types: Clearly define backup schedules, types, and methods.

  - Backup Locations and Storage Media: Specify where backups are stored and the media used.

  - Restore Procedures for Different Scenarios: Provide detailed steps for restoring data in various scenarios (e.g., file-level recovery, full system restore).

  - Troubleshooting Steps and Error Handling: Include troubleshooting guides for common issues during backup and restore processes.

- Disaster Recovery Plan (DRP) Integration: Integrate backup procedures and documentation into your organization's Disaster Recovery Plan (DRP):

  - Data Recovery Steps: Outline detailed steps for recovering data and systems after a disaster.

  - System Restoration Protocols: Provide instructions for restoring servers, applications, and configurations.

  - Business Resumption Plans: Ensure the DRP includes plans for resuming critical business operations post-recovery.

- Regular Review and Updates: Review and update backup procedures and documentation regularly to reflect changes in infrastructure, backup technologies, and business requirements:

  - Infrastructure Changes: Update documentation to include new systems, storage solutions, or backup tools.

  - Policy Adjustments: Reflect changes in compliance requirements, retention policies, or organizational priorities.

- Training and Knowledge Sharing: Train IT staff and relevant personnel on backup procedures, DRP protocols, and data recovery best practices:

  - Hands-On Training: Conduct practical exercises to ensure the team is proficient in executing backup and restore operations.

  - Knowledge Accessibility: Ensure documentation is current, accessible, and easy to understand for all stakeholders.

Well-maintained documentation ensures clarity, consistency, and preparedness for backup and recovery operations, reducing errors and improving RTOs.

9. Regularly Review and Update Backup Strategy: Adapting to Change and Evolving Threats

Data backup is not a static process. Regularly review and update your backup strategy to adapt to changing business needs, data growth, technology advancements, and evolving threat landscapes:

- Annual Backup Strategy Review: Conduct an annual review of your overall backup strategy to assess its effectiveness, identify gaps, and align it with current business objectives and risk tolerance:

  - Effectiveness Assessment: Evaluate whether the backup strategy meets recovery objectives and minimizes risks.

  - Gap Analysis: Identify areas for improvement or additional safeguards.

- Infrastructure and Technology Updates: Update your backup strategy to incorporate new infrastructure components, technology upgrades, and changes in data volumes or data types:

  - New Technologies: Leverage advancements such as cloud storage, AI-driven backup solutions, or advanced deduplication techniques.

  - Scalability Adjustments: Adapt backup methods and storage to accommodate growing data volumes.

- Threat Landscape Assessment: Evaluate the evolving threat landscape, including ransomware, cyber threats, and disaster risks, and adjust your backup strategy to mitigate emerging risks:

  - Enhanced Security Measures: Strengthen encryption, access controls, and network segmentation to protect backups.

  - Disaster Recovery Enhancements: Improve offsite backup capabilities and geographic redundancy to address new disaster risks.

- Compliance and Regulatory Changes: Review and update your backup strategy to comply with new or updated regulatory requirements, data privacy laws, and industry standards:

  - Regulatory Alignment: Ensure backup policies and procedures align with current compliance mandates (e.g., GDPR, HIPAA).

  - Audit Readiness: Maintain documentation and processes that demonstrate compliance during audits.

- Feedback from Restore Tests and Incidents: Incorporate lessons learned from restore tests, data loss incidents, and recovery exercises into your backup strategy:

  - Refined Procedures: Use feedback to improve backup and restore processes.

  - Improved RTOs: Focus on reducing recovery times and enhancing overall data recovery preparedness.

Regularly reviewing and updating your backup strategy ensures it remains effective, relevant, and aligned with your organization's evolving needs and the dynamic data protection landscape.

10. Educate Users and Promote Data Backup Awareness: Fostering a Data Protection Culture

Building a data protection culture within your organization is crucial for ensuring data backup effectiveness. Promote data backup awareness and educate users on their roles and responsibilities:

- User Training on Data Backup Importance: Conduct user training sessions to educate employees about the importance of data backup, data loss risks, and their role in data protection. Emphasize the shared responsibility for data security.

- Promote Data Backup Best Practices: Communicate data backup best practices to users, such as:

  - Saving Files to Network Shares or Cloud Storage: Encourage users to save critical files to network shares or cloud storage that are regularly backed up, rather than local drives that may not be protected.

  - Avoiding Local Data Storage for Critical Data: Discourage storing mission-critical data solely on local devices without backup.

  - Reporting Data Loss Incidents Promptly: Train users to report any data loss incidents, accidental deletions, or potential data security breaches immediately to IT.

- Regular Security Awareness Campaigns: Incorporate data backup awareness into broader security awareness campaigns to reinforce data protection culture and user responsibility.

- Lead by Example and Foster a Proactive Approach: IT leadership and management should champion data backup best practices and promote a proactive approach to data protection throughout the organization.

Educating users and fostering data backup awareness creates a security-conscious culture, reduces human-error related data loss, and enhances overall data protection effectiveness.

By diligently implementing these best practices, organizations can establish a robust, reliable, and efficient data backup strategy that minimizes data loss risks, ensures business continuity, and provides peace of mind in an increasingly data-dependent world. Continuous vigilance, regular testing, and ongoing refinement are key to maintaining effective data protection.

1.6 Comparing Backup Types

2.1 What is Data Restore? Recovering Your Data and Systems

Data restore is the process of retrieving backed-up data and using it to reinstate lost, corrupted, or inaccessible original data. It is the critical step in the data protection lifecycle that enables organizations to recover from data loss incidents and resume normal operations. Effective data restore processes are essential for minimizing downtime and data loss.

Data restore is akin to using a recovery blueprint to rebuild your digital environment after a data loss event. Consider these analogies:

- Rebuilding from Blueprints: If a building is damaged or destroyed, blueprints are used to reconstruct it. Similarly, data backups serve as blueprints to rebuild your digital systems and data after a loss.

- Restoring a Masterpiece: Imagine a valuable painting damaged in an accident. Data restore is like a skilled restoration artist carefully piecing together and restoring the masterpiece to its original condition using archived fragments.

The Data Restore Process Involves Several Key Steps

Each step is critical to ensuring successful data recovery:

- Identifying the Data to Restore: Determine the specific data that needs to be recovered. This may range from individual files or folders to entire systems or databases.

- Selecting the Appropriate Backup Set: Choose the most relevant backup set for the restore operation. Typically, this is the most recent, uncorrupted backup that contains the required data and precedes the data loss event.

- Initiating the Restore Process: Start the restore operation using the backup software, control panel, or cloud service interface. This involves specifying the backup set, destination for restored data, and restore options.

- Performing the Data Restore: Execute the restore process, allowing the backup system to retrieve the selected data from the backup storage and copy it to the designated recovery location.

- Verifying Data Integrity and Completeness: After the restore is complete, rigorously verify that the recovered data is intact, complete, and consistent. This includes checking file integrity, database consistency, and application functionality.

- Testing Restored Systems and Applications: Thoroughly test restored systems and applications to ensure they are functioning correctly and meeting operational requirements.

The complexity and duration of the restore process depend on factors such as the backup method used, the amount of data being restored, the performance of the backup storage and network infrastructure, and the granularity of the restore operation. Keeping the actual recovery time within the Recovery Time Objective (RTO) is a primary goal of effective restore processes.
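The backup-selection step above ("the most recent, uncorrupted backup that precedes the data loss event") can be expressed as a small filter. This is a sketch under assumptions: the record fields `taken_at` and `verified` are hypothetical, and real backup catalogs will name and store this information differently.

```python
from datetime import datetime


def select_backup_set(backups, incident_time):
    """Pick the newest verified backup taken before the data loss event."""
    candidates = [
        b for b in backups
        if b["verified"] and b["taken_at"] < incident_time
    ]
    if not candidates:
        raise LookupError("no verified backup precedes the incident")
    return max(candidates, key=lambda b: b["taken_at"])


# Hypothetical catalog of nightly backups.
backups = [
    {"id": "mon", "taken_at": datetime(2025, 3, 10, 2, 0), "verified": True},
    {"id": "tue", "taken_at": datetime(2025, 3, 11, 2, 0), "verified": False},  # failed verification
    {"id": "wed", "taken_at": datetime(2025, 3, 12, 2, 0), "verified": True},   # taken after the incident
]
chosen = select_backup_set(backups, incident_time=datetime(2025, 3, 11, 15, 30))
print(chosen["id"])  # mon
```

Here the newest backup is not the right one: it was taken after the incident and would restore the damaged state, so the selection falls back to the last verified backup before it.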

2.2 Restore Granularity: Tailoring Recovery to Specific Needs

A robust data recovery strategy requires precise alignment with the scope and urgency of potential incidents. Restore granularity ensures organizations can address both isolated data losses and system-wide failures efficiently. This section outlines four core recovery methods, their applications, and operational considerations.

1. Full System Restore

Definition:

Rebuilds an entire system environment, including operating systems, applications, configurations, and stored data, to a predefined state.

Operational Context:

Use Cases:

- Total system failure (e.g., server hardware malfunction).

- Recovery from ransomware encrypting all system files.

- Widespread corruption due to software updates or configuration errors.

Implementation:

- Relies on a complete system image captured during backups.

- Requires downtime proportional to data volume and infrastructure complexity.

Benefits:

- Restores all components to a functional state, eliminating dependencies on fragmented repairs.

- Returns the system to a single consistent state, because all components come from the same backup.

Limitations:

- Extended downtime during large-scale restoration.

- Potential overkill for minor incidents.

2. Granular Restore (Files, Folders, Databases)

Definition:

Targeted recovery of individual files, directories, or database elements (e.g., tables, records) without rebuilding the entire system.

Operational Context:

Use Cases:

- Accidental deletion of critical documents or user data.

- Corruption within specific database tables or application files.

- Partial data loss from user error or isolated malware.

Implementation:

- Requires metadata indexing to locate and extract specific data.

- Often integrated with searchable backup catalogs.

Benefits:

- Fast recovery of mission-critical data, because only the affected items are copied back.

- Minimal disruption to unaffected systems or workflows.

Limitations:

- Ineffective for systemic issues (e.g., OS corruption).

- Requires detailed backup structuring to enable item-level access.

3. Bare-Metal Restore

Definition:

Reconstructs a system from a backup image onto new or replacement hardware, bypassing reliance on existing infrastructure.

Operational Context:

Use Cases:

- Total hardware failure with no salvageable components.

- Data center disasters (fire, flood, physical damage).

- Legacy system migration to modern hardware.

Implementation:

- Dependent on hardware-agnostic backup images.

- Often paired with automated driver detection for new hardware.

Benefits:

- Eliminates hardware dependency for recovery.

- Streamlines migration to updated infrastructure.

Limitations:

- Longer restoration time than a granular restore, because the entire image must be written to the new hardware.

- Requires frequent image updates to reflect system changes.

4. Point-in-Time Restore

Definition:

Recovers data to a specific timestamp, leveraging incremental or transactional backups to revert to a known-good state.

Operational Context:

Use Cases:

- Undetected data corruption discovered after multiple backup cycles.

- Rollback of faulty software updates or configuration changes.

- Recovery from ransomware with a known infection window.

Implementation:

- Relies on continuous or frequent backup snapshots.

- Requires transactional logging (e.g., database transaction logs).

Benefits:

- Precision in avoiding reintroduction of corrupted data.

- Minimizes data loss between last backup and incident time.

Limitations:

- Storage-intensive for environments with high transaction volumes.

- Complex configuration for multi-system consistency.
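To illustrate how a point-in-time restore combines a snapshot with transactional logging, here is a minimal sketch that replays logged changes on top of a snapshot and stops at the requested timestamp. The in-memory dictionary, the integer timestamps, and the `(timestamp, operation, key, value)` log format are simplifying assumptions; database engines implement this with their own log formats and tools.

```python
def restore_to_point_in_time(snapshot, snapshot_time, log, target_time):
    """Rebuild state as of target_time from a snapshot plus a change log."""
    if target_time < snapshot_time:
        raise ValueError("target time precedes the snapshot; use an older snapshot")
    state = dict(snapshot)  # never modify the backup copy itself
    for timestamp, operation, key, value in sorted(log, key=lambda entry: entry[0]):
        if timestamp <= snapshot_time:
            continue  # already reflected in the snapshot
        if timestamp > target_time:
            break     # stop before changes made after the chosen point
        if operation == "set":
            state[key] = value
        elif operation == "delete":
            state.pop(key, None)
    return state


# Hypothetical snapshot taken at t=10, followed by logged changes.
snapshot = {"homepage": "v1"}
log = [
    (12, "set", "about", "v1"),
    (15, "delete", "homepage", None),  # the unwanted change
    (20, "set", "about", "corrupted"),
]
before_incident = restore_to_point_in_time(snapshot, 10, log, target_time=14)
print(before_incident)  # {'homepage': 'v1', 'about': 'v1'}
```

Choosing `target_time=14` recovers the legitimate change made at t=12 while leaving out the deletion at t=15 and everything after it.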

Strategic Considerations

- Risk Alignment: Match restore methods to incident severity. Use granular recovery for isolated issues; escalate to full system or bare-metal for catastrophic failures.

- Testing Protocols: Validate each method quarterly. Simulate ransomware attacks, hardware failures, and accidental deletions to confirm recovery timelines.

- Automation: Integrate recovery workflows with IT service management (ITSM) tools to reduce manual intervention.

- Documentation: Maintain clear runbooks specifying when and how to deploy each restore type.

2.3 Testing Your Restores: Validating Data Recovery Readiness

Data backups are only valuable if they can be successfully restored when needed. Regularly testing your restore processes is a critical, often overlooked, component of a robust backup strategy. Restore testing validates the integrity of backups, verifies recovery procedures, and ensures that Recovery Time Objectives (RTOs) can be met.

The Importance of Restore Testing

Restore testing serves three purposes:

- Verifying Backup Integrity and Reliability: Restore testing is the ultimate validation of your backup process. It ensures that:

  - Backups Are Not Corrupted: Regular tests confirm that backup data is not corrupted during the backup process or storage. Data corruption can render backups unusable, making recovery impossible.

  - Data Is Restorable: Testing verifies that backups can be successfully restored using the defined procedures and tools. A backup that cannot be restored is effectively useless.

  - Data Completeness and Consistency: Restore tests ensure that backups contain all the expected data and that the restored data is consistent and usable. Incomplete or inconsistent backups can lead to data loss or application failures after recovery.

  - Data Integrity Checks: Implement checksums and hash verification during backup and restore processes to detect data corruption early. Comparing hash values recorded at backup time with those computed after a restore confirms data integrity throughout the backup lifecycle.

- Validating Restore Procedures and RTOs: Restore testing is crucial for validating your recovery procedures and assessing RTOs:

  - Procedure Validation: Testing confirms that documented restore procedures are accurate, complete, and effective. It identifies any gaps or errors in the procedures that need to be addressed.

  - Process Familiarization: Regular testing familiarizes IT staff with the restore process, ensuring they are prepared to execute recoveries efficiently during real data loss incidents. Practice makes the recovery process smoother and faster.

  - Recovery Time Measurement and Optimization: Restore tests provide data on actual restore times, allowing organizations to compare them against their RTOs and identify areas for optimization. Knowing real restore times helps in setting realistic recovery expectations and improving restore efficiency. Aim to shorten recovery times through efficient backup and restore technologies and well-practiced procedures.

- Training and Preparedness for Data Recovery: Restore testing serves as a valuable training exercise for IT teams and stakeholders involved in data recovery:

  - Team Training and Skill Development: Restore testing provides hands-on training for IT staff, enhancing their skills and preparedness for data recovery scenarios. Training ensures the team is proficient in executing restore procedures and troubleshooting issues.

  - Role Assignment and Responsibility Clarification: Testing helps clarify roles and responsibilities within the IT team for data recovery operations. Clear roles and responsibilities streamline the recovery process and improve coordination.

  - Disaster Recovery Plan (DRP) Drills and Simulations: Regular restore tests can be integrated into Disaster Recovery Plan (DRP) drills to rehearse real outage scenarios and test team response, communication protocols, and recovery workflows. DRP drills identify weaknesses in the recovery plan and improve overall preparedness.

Make restore testing a routine and integral part of your backup strategy. Schedule restore tests regularly, such as monthly or quarterly, to ensure backups remain reliable and recovery processes are effective. It is far better to identify and resolve issues during a planned test than to encounter them during a real data crisis. Industry best practices, as highlighted by resources like Fujitsu's research on data backup and recovery, emphasize the critical role of regular restore testing in ensuring data recovery readiness.
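The checksum verification mentioned above can be sketched with a manifest of SHA-256 hashes recorded at backup time and compared against the restored files. The file names and directory layout below are made up for the demonstration, and reading each file fully into memory is a simplification; large files would normally be hashed in chunks.

```python
import hashlib
import tempfile
from pathlib import Path


def build_manifest(root):
    """Map each file's relative path to the SHA-256 hash of its contents."""
    root = Path(root)
    return {
        path.relative_to(root).as_posix(): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare_manifests(expected, actual):
    """Return (missing, corrupted) file lists relative to the expected manifest."""
    missing = sorted(set(expected) - set(actual))
    corrupted = sorted(
        name for name in set(expected) & set(actual)
        if expected[name] != actual[name]
    )
    return missing, corrupted


# Demonstration in a throwaway directory with made-up site files.
with tempfile.TemporaryDirectory() as tmp:
    source, restored = Path(tmp, "source"), Path(tmp, "restored")
    source.mkdir()
    restored.mkdir()
    (source / "index.html").write_text("<h1>home</h1>")
    (source / "app.css").write_text("body{}")
    expected = build_manifest(source)                     # recorded at backup time
    (restored / "index.html").write_text("<h1>hom</h1>")  # damaged copy; app.css is absent
    missing, corrupted = compare_manifests(expected, build_manifest(restored))

print("missing:", missing)
print("corrupted:", corrupted)
```

A restore test passes this check only when both lists are empty.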

Example Restore Testing Metrics: Measuring Recovery Performance

To objectively evaluate the effectiveness of restore testing, it is crucial to measure key performance indicators (KPIs) that reflect recovery speed, data integrity, and system performance post-restore. Here are example metrics from a fictional restore test conducted for "Example-Business-Website.com," illustrating how to quantify and analyze restore testing outcomes:

- Test Date: 2025-03-15

- Backup Type Tested: Full Backup (Cloud Backup)

- Scenario Simulated: Full Server Failure

- Restore Time (measured against the RTO): 1 hour 15 minutes

- Data Integrity Check: Passed (all files and database entries verified using checksums and manual validation)

- Website Performance Post-Restore:

  - Time to First Byte (TTFB): Pre-Restore Average: 0.25 seconds, Post-Restore Average: 0.28 seconds (Acceptable, within normal variance)

  - Page Load Time (Fully Loaded): Pre-Restore Average: 2.5 seconds, Post-Restore Average: 2.7 seconds (Acceptable, within normal variance)

- Conclusion: Restore process deemed successful based on test metrics. The measured restore time is within acceptable limits and aligns with business requirements. Website performance post-restore is within the expected range, with minor, transient fluctuations. Restore procedure documentation validated and team proficiency confirmed through testing.
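A pass/fail decision like the one in this example can be made repeatable by encoding the thresholds. The sketch below uses the figures from the fictional test; the 2-hour RTO target and the 15% performance tolerance are assumptions added for illustration, since the example does not state them.

```python
from datetime import timedelta


def evaluate_restore_test(restore_time, rto_target, baseline, post_restore, tolerance=0.15):
    """Compare a measured restore time and post-restore metrics against targets."""
    metrics = {}
    for name, before in baseline.items():
        change = (post_restore[name] - before) / before  # relative slowdown
        metrics[name] = {"change": round(change, 3), "ok": change <= tolerance}
    rto_met = restore_time <= rto_target
    return {
        "rto_met": rto_met,
        "metrics": metrics,
        "passed": rto_met and all(m["ok"] for m in metrics.values()),
    }


result = evaluate_restore_test(
    restore_time=timedelta(hours=1, minutes=15),
    rto_target=timedelta(hours=2),  # assumed target, not stated in the example
    baseline={"ttfb_s": 0.25, "page_load_s": 2.5},
    post_restore={"ttfb_s": 0.28, "page_load_s": 2.7},
)
print(result["passed"], result["metrics"])
```

With these inputs TTFB is 12% slower and page load 8% slower, both inside the assumed tolerance, so the test passes. Recording the same structure after every test makes trends easy to compare over time.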

By following these steps, you can build a secure, reliable, and well-organized data backup system. This approach minimizes gaps in data protection, ensures operations continue smoothly during unexpected events, and strengthens trust in your ability to manage data effectively. To maintain its effectiveness over time, you should:

- Monitor backup processes to identify issues early.

- Test backups routinely to confirm they work as intended.

- Update your strategy as technology, threats, or business needs change.

Proactively maintaining your backup systems keeps you prepared to handle issues and protect data accuracy. Define clear procedures to prevent data loss, allocate resources for regular testing, and adapt plans to align with evolving priorities. This method ensures readiness and reduces risks in a world where data reliability is non-negotiable.

2.4 Simulating a Data Loss Scenario: A Step-by-Step Guide for Restore Testing

To conduct effective restore testing, it is essential to simulate a data loss scenario in a controlled, non-production environment. This approach ensures that testing does not disrupt live operations and provides a realistic assessment of recovery capabilities. Here is a step-by-step guide for simulating a data loss scenario and testing your restore process:

Step 1: Prepare a Dedicated Testing Environment

Create an isolated testing environment that mirrors your production setup but is separate from your live systems. This environment should include:

- Staging Server or Isolated Network: Use a staging server, virtual machines, or an isolated network segment that replicates your production environment's hardware, software, and configurations. This ensures tests are conducted in a realistic setting without impacting live systems.

- Representative Data Set: Populate the testing environment with a representative subset of your production data. Use anonymized or sample data to protect sensitive information while still providing a realistic test scenario.

- Recent Backup Copy: Ensure the testing environment has access to a recent backup copy that you intend to restore. Select a backup set that is representative of your typical backup schedule and data volume.

- Documented Test Plan: Develop a detailed test plan that outlines the test objectives, scope, procedures, data loss scenario, restore steps, verification criteria, and expected outcomes. A well-defined test plan ensures structured and consistent testing.

Step 2: Simulate Data Loss in the Testing Environment

Introduce a controlled data loss scenario in the testing environment to simulate a real-world data loss incident. Examples of data loss scenarios include:

- Accidental File Deletion: Simulate accidental deletion of critical files or folders. For a website, this could involve deleting a specific folder of website files via command line or file manager.

- Database Corruption or Loss: Simulate database corruption or loss by dropping a test database table or corrupting database files.

- System Configuration Failure: Simulate system configuration failures by accidentally removing critical configuration files, mimicking configuration errors or system malfunctions.

- Document Data Loss Details: Whichever scenario you simulate, document exactly what data was “lost,” the method of simulated loss, and the timestamp of the simulated data loss event.
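As an illustration of the accidental-deletion scenario, the following Python sketch deletes a folder inside a throwaway sandbox and records what was lost, how, and when. The function name `simulate_folder_deletion`, the record fields, and the sample paths are hypothetical. The guard that refuses paths outside the sandbox is an added safety assumption, not part of any documented procedure.

```python
import json
import shutil
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def simulate_folder_deletion(target: Path, sandbox_root: Path) -> dict:
    """Delete `target` inside the sandbox and return a record of what was lost."""
    target = target.resolve()
    sandbox_root = sandbox_root.resolve()
    # Safety guard: refuse to touch anything outside the testing environment.
    if sandbox_root not in target.parents:
        raise ValueError(f"{target} is outside the sandbox {sandbox_root}")

    # List the files before deleting them, so the loss can be documented.
    lost_files = sorted(
        p.relative_to(sandbox_root).as_posix()
        for p in target.rglob("*") if p.is_file()
    )
    shutil.rmtree(target)
    return {
        "scenario": "accidental folder deletion",
        "method": "shutil.rmtree",
        "deleted_path": target.relative_to(sandbox_root).as_posix(),
        "lost_files": lost_files,
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    # Build a tiny fake site in a temporary directory, then "lose" a folder.
    with tempfile.TemporaryDirectory() as tmp:
        sandbox = Path(tmp)
        uploads = sandbox / "site" / "uploads"
        uploads.mkdir(parents=True)
        (uploads / "logo.png").write_bytes(b"fake image")
        record = simulate_folder_deletion(uploads, sandbox)
        print(json.dumps(record, indent=2))
```

Saving the returned record alongside the test plan gives the verification step a precise list of what must reappear after the restore.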

Step 3: Initiate the Restore Process Using Documented Procedures

Execute the data restore process in the testing environment, strictly following your organization's documented restore procedures. This step involves:

- Follow Documented Restore Steps: Adhere to your documented restore procedure meticulously. This may involve using hosting control panels, command-line interfaces, cloud backup service consoles, or backup software interfaces.

- Record Restore Steps and Time: Carefully note the steps taken during the restore process, any challenges encountered, and the time taken to initiate the restore and complete the data recovery.
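Recording steps and timings by hand is easy to forget under pressure. A minimal sketch of a timing log follows, assuming the restore is driven from a Python script. The `RestoreLog` class and the step descriptions are hypothetical, and the `time.sleep` calls stand in for whatever restore commands your documented procedure actually uses.

```python
import time
from contextlib import contextmanager
from datetime import datetime, timezone


class RestoreLog:
    """Collects one entry per restore step, with start time and duration."""

    def __init__(self):
        self.entries = []

    @contextmanager
    def step(self, description):
        started_at = datetime.now(timezone.utc).isoformat()
        t0 = time.monotonic()
        status = "ok"
        try:
            yield
        except Exception as exc:
            # Keep the failure in the log, then let it propagate.
            status = f"failed: {exc}"
            raise
        finally:
            self.entries.append({
                "step": description,
                "started_at_utc": started_at,
                "duration_s": round(time.monotonic() - t0, 3),
                "status": status,
            })

    def total_seconds(self):
        return sum(entry["duration_s"] for entry in self.entries)


log = RestoreLog()
with log.step("Restore website files from backup archive"):
    time.sleep(0.1)   # placeholder for the real restore command
with log.step("Import database dump"):
    time.sleep(0.05)  # placeholder for the real import command

for entry in log.entries:
    print(entry)
print("total seconds:", log.total_seconds())
```

Because failed steps are logged before the exception propagates, the challenges encountered end up in the same record as the timings.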

Step 4: Verify Data Restoration and Integrity

After the restore process is complete, rigorously verify that the “lost” data has been successfully restored and that data integrity is maintained. Verification steps include:

- Data Presence Verification: Confirm that the “lost” data is indeed restored to the testing environment. Check for the presence of restored files, folders, databases, or system configurations.

- Data Integrity Checks: Perform data integrity checks to ensure that the restored data is consistent, accurate, and uncorrupted. For websites, this includes:

  - Website Functionality Testing: Browse key website pages, test forms, user logins, and check critical website functionalities to ensure they are working as expected post-restore.

  - Database Integrity Validation: If applicable, perform database integrity checks to validate database records, data consistency, and transactional integrity. Run database queries and integrity checks to ensure data accuracy.

  - File Verification and Checksums: Confirm the presence, size, and content of restored files. Use checksums or hash verification tools to compare restored files with known good copies or backup metadata to ensure file integrity.

- Document Verification Results: Document all verification steps and the results of data integrity checks. Record any discrepancies, errors, or issues encountered during the verification process.
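One possible way to carry out the checksum comparison is sketched below in Python. It assumes you can build a manifest of SHA-256 hashes from a known good copy (or load an equivalent one from backup metadata) and compare it with a manifest of the restored tree. The helper names are illustrative.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict:
    """Map each file's path (relative to root) to its SHA-256 hash."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*")) if p.is_file()
    }


def compare_manifests(expected: dict, restored: dict) -> dict:
    """Report files that are missing, unexpected, or differ in content."""
    return {
        "missing": sorted(set(expected) - set(restored)),
        "unexpected": sorted(set(restored) - set(expected)),
        "mismatched": sorted(
            name for name in set(expected) & set(restored)
            if expected[name] != restored[name]
        ),
    }
```

A restore passes this check when all three lists are empty, for example `compare_manifests(build_manifest(known_good_dir), build_manifest(restored_dir))`, where both directory variables are placeholders for your own paths.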

Step 5: Performance Testing and RTO Measurement (Optional but Recommended)

Conduct performance testing to assess the impact of the restore process on system performance, and measure the actual recovery time against your Recovery Time Objective (RTO). Performance testing includes:

- Website Performance Metrics: Measure key website performance metrics, such as:

  - Time to First Byte (TTFB): Measure TTFB before and after the restore to detect any performance degradation in server response times.

  - Page Load Time (Fully Loaded): Measure full page load times before and after the restore to assess the impact on user experience.

  - Transaction Response Times: Measure response times for critical website transactions, such as form submissions or e-commerce checkout processes.

- Recovery Time Measurement: Measure the total recovery time for the restore process, from initiating the restore to full data verification and restored system functionality. Compare this actual recovery time with your organization's defined RTO target.
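The comparison of measured recovery time against the RTO target is simple arithmetic, sketched here in Python. The function name, the result fields, and the timestamps are made up for illustration. In practice the start and end times would come from your restore log.

```python
from datetime import datetime, timedelta


def evaluate_recovery_time(restore_started: datetime,
                           service_verified: datetime,
                           rto_target: timedelta) -> dict:
    """Compare the measured recovery time with the RTO target."""
    actual = service_verified - restore_started
    if actual < timedelta(0):
        raise ValueError("verification time is earlier than restore start")
    return {
        "actual_minutes": actual.total_seconds() / 60,
        "target_minutes": rto_target.total_seconds() / 60,
        "within_target": actual <= rto_target,
        # A negative margin means the target was missed by that many minutes.
        "margin_minutes": (rto_target - actual).total_seconds() / 60,
    }


result = evaluate_recovery_time(
    restore_started=datetime(2024, 1, 15, 10, 0),    # sample value
    service_verified=datetime(2024, 1, 15, 10, 42),  # sample value
    rto_target=timedelta(hours=1),                   # sample target
)
print(result)
```

With these sample values the restore took 42 minutes against a 60-minute target, leaving an 18-minute margin.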

Step 6: Document Findings, Refine Procedures, and Train Team

After completing the restore test, thoroughly document all findings, analyze the results, and refine your backup and restore processes based on the test outcomes. Key post-test activities include:

- Detailed Test Report: Prepare a comprehensive test report that includes:

  - Test Objectives and Scope

  - Simulated Data Loss Scenario Details

  - Step-by-Step Restore Procedures Used

  - Restore Time Measurement (compared against the RTO target)

  - Data Verification Results and Integrity Check Outcomes

  - Performance Testing Metrics (if applicable)

  - Challenges Encountered and Lessons Learned

  - Recommendations for Improvement

- Identify Areas for Improvement: Analyze the test results to identify any weaknesses, inefficiencies, or gaps in your backup and restore strategy, procedures, or documentation. Focus on areas where recovery times can be reduced, restore procedures can be simplified, or data integrity can be enhanced.

- Refine Backup and Restore Procedures: Update and refine your backup and restore procedures, documentation, and training materials based on the test findings and recommendations. Ensure that restore procedures are clear, concise, and readily accessible to IT staff.

- Team Training and Knowledge Sharing: Use the test results and lessons learned to train your IT team on data recovery best practices, refined procedures, and troubleshooting techniques. Share test reports and findings with relevant stakeholders to improve overall data recovery preparedness.
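Keeping test reports in a consistent, machine-readable shape makes it easier to compare results from one test to the next. The following Python sketch mirrors the report sections listed above as a dataclass that serializes to JSON. The class and field names are hypothetical and should be adapted to your own reporting format.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class RestoreTestReport:
    objectives_and_scope: str
    data_loss_scenario: str
    restore_steps: list = field(default_factory=list)
    restore_time_minutes: float = 0.0
    verification_results: dict = field(default_factory=dict)
    performance_metrics: dict = field(default_factory=dict)  # optional section
    challenges_and_lessons: list = field(default_factory=list)
    recommendations: list = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the report so it can be archived or shared."""
        return json.dumps(asdict(self), indent=2)


# Placeholder values only; a real report is filled in from the test itself.
report = RestoreTestReport(
    objectives_and_scope="Validate file-level restore on the staging server",
    data_loss_scenario="Simulated accidental deletion of an uploads folder",
)
report.restore_steps.append("Restored folder from most recent backup")
report.recommendations.append("Add checksum verification to the procedure")
print(report.to_json())
```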

By regularly conducting simulated data loss and restore tests using this step-by-step approach, organizations can strengthen their backup strategy, validate recovery capabilities, shorten recovery times, and prepare to respond effectively to real data emergencies. Consistent testing and refinement are essential for maintaining data resilience and business continuity.

3. Data Recovery: Retrieving Lost Information

Data recovery is the process of restoring data that has been lost, corrupted, or become inaccessible. It’s a critical capability that complements data backup, providing the means to retrieve information when data loss incidents occur.

Data recovery is your plan B when data loss happens despite your best efforts in prevention and backup. It’s about having the tools and processes to retrieve valuable information, whether from backup media or directly from damaged storage devices. Effective data recovery minimizes downtime and data loss impact.

- Reactive Process: Data recovery is typically a reactive process, initiated after a data loss event has occurred.

- Range of Scenarios: It addresses various data loss scenarios, from simple file deletions to complex system failures.

- Essential for Business Continuity: Crucial for maintaining business operations and minimizing the impact of data loss on productivity and reputation.

3.1. How Data Recovery Works

Data recovery processes depend on the cause, nature, and extent of the data loss. Understanding the typical stages below is key to effective recovery planning and execution:

Assessment of Data Loss:

- Identify the Cause: Determine what caused the data loss (e.g., hardware failure, software corruption, accidental deletion, virus attack).

- Evaluate Extent of Loss: Assess the scope of data loss – is it a single file, a directory, a database, or an entire system?

- Device Condition: Check the condition of the storage device (if applicable). Is it physically damaged, logically corrupted, or functioning normally?

Recovery Method Selection:

- Restore from Backup: If backups are available and up-to-date, restoration from backup is the primary and most efficient method.

- Software-Based Recovery: Use data recovery software to scan storage devices and recover deleted or lost files. Effective for logical failures and accidental deletions.

- Professional Data Recovery Services: For physical damage or complex logical failures, professional services with specialized tools and expertise may be necessary.
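The selection logic described above can be expressed as a small decision function. This Python sketch encodes only the rules stated in the list. The enum and function names are hypothetical, and real incidents usually need human judgment on top of a rule like this.

```python
from enum import Enum


class DeviceCondition(Enum):
    HEALTHY = "functioning normally"
    LOGICAL_CORRUPTION = "logically corrupted"
    PHYSICAL_DAMAGE = "physically damaged"


class RecoveryMethod(Enum):
    RESTORE_FROM_BACKUP = "restore from backup"
    SOFTWARE_RECOVERY = "software-based recovery"
    PROFESSIONAL_SERVICE = "professional data recovery service"


def choose_recovery_method(has_current_backup: bool,
                           condition: DeviceCondition,
                           complex_logical_failure: bool = False) -> RecoveryMethod:
    # An available, up-to-date backup is the primary and most efficient route.
    if has_current_backup:
        return RecoveryMethod.RESTORE_FROM_BACKUP
    # Physical damage or complex logical failures call for specialists.
    if condition is DeviceCondition.PHYSICAL_DAMAGE or complex_logical_failure:
        return RecoveryMethod.PROFESSIONAL_SERVICE
    # Remaining cases: accidental deletions and ordinary logical failures.
    return RecoveryMethod.SOFTWARE_RECOVERY


print(choose_recovery_method(False, DeviceCondition.LOGICAL_CORRUPTION).value)
```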

Data Recovery Process Execution:

- Backup Restoration: Follow established procedures to restore data from backup media. Verify data integrity post-restore.

- Software Recovery:

  - Scanning: Data recovery software scans the storage device to locate recoverable data.

  - Data Extraction: Recoverable files are extracted and saved to a safe location (different from the source device to prevent overwriting).

  - File Repair: Some software can repair corrupted files during recovery.

- Professional Services:

  - Clean Room Environment: Physical repairs of damaged drives are often performed in a clean room so that dust and other particles do not contaminate the exposed drive internals.

  - Advanced Techniques: Professionals use specialized hardware and software tools and techniques for complex data recovery scenarios.

Data Verification and Validation:

- Check Data Integrity: Verify that recovered data is complete, uncorrupted, and functional.

- Functionality Testing: Test recovered applications and databases to ensure they are working correctly.

- User Verification: Have users validate recovered data, especially for critical business information.
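For a recovered database, part of this verification can be automated. The sketch below assumes a SQLite database purely for illustration (the text does not name a database engine). It runs SQLite's built-in `PRAGMA integrity_check` and compares row counts against expected values. The function name and the expected counts are hypothetical, and other database systems provide their own consistency-check tools.

```python
import sqlite3


def check_sqlite_database(db_path: str, expected_row_counts: dict) -> list:
    """Return a list of problems found; an empty list means all checks passed."""
    problems = []
    conn = sqlite3.connect(db_path)
    try:
        # SQLite returns a single row containing "ok" when no corruption is found.
        result = [row[0] for row in conn.execute("PRAGMA integrity_check")]
        if result != ["ok"]:
            problems.extend(f"integrity_check: {msg}" for msg in result)

        for table, expected in expected_row_counts.items():
            try:
                # Table names come from our own test plan, not from user input.
                (actual,) = conn.execute(
                    f'SELECT COUNT(*) FROM "{table}"'
                ).fetchone()
            except sqlite3.OperationalError as exc:
                problems.append(f"{table}: {exc}")
                continue
            if actual != expected:
                problems.append(
                    f"{table}: expected {expected} rows, found {actual}"
                )
    finally:
        conn.close()
    return problems
```

Matching row counts do not prove that every record is correct, so this complements user validation of critical business data rather than replacing it.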

Post-Recovery Actions:

- Root Cause Analysis: Investigate the cause of data loss to prevent future incidents.

- Improve Prevention Measures: Implement measures to mitigate identified risks and enhance data protection strategies.

- Update Documentation: Update data recovery procedures and documentation based on lessons learned from the incident.

Effective data recovery requires a systematic approach, the right tools, and a clear understanding of data loss scenarios. It’s a critical process for minimizing data loss impact and ensuring business resilience.

3.2. Common Data Loss Scenarios

Understanding common causes of data loss helps in preparing effective data recovery strategies and preventive measures.

Data loss can occur due to a variety of reasons, broadly categorized into physical, logical, and human-induced causes:

Hardware Failure:

- Hard Drive Failures: Mechanical failures, electronic component damage, wear and tear leading to drive crashes.

- SSD Failures: NAND flash wear, controller failures, power surges causing SSD breakdowns.

- RAID Array Failures: More drive failures than the array's redundancy can tolerate, leaving the data inaccessible.

- Server and System Failures: Failures in servers, motherboards, power supplies, or other critical hardware components.

Software Corruption:

- File System Corruption: Errors in the file system structure due to power outages, software bugs, or improper shutdowns.

- Database Corruption: Database errors, transaction failures, or software bugs leading to database corruption.

- Application Errors: Bugs or conflicts in applications causing data corruption or inaccessibility.

- Operating System Errors: OS crashes, updates gone wrong, or system file corruption leading to data loss.

Human Error:

- Accidental Deletion: Unintentionally deleting files, folders, or databases.

- Formatting Errors: Mistakenly formatting drives or partitions containing data.

- Overwriting Data: Accidentally overwriting files or backups with incorrect or outdated information.

- Misconfiguration: Improperly configured systems or storage leading to data loss or inaccessibility.

Cyberattacks and Malware:

- Ransomware Attacks: Malware encrypting data and demanding ransom for its release, effectively making data inaccessible.

- Virus and Malware Infections: Viruses or malware corrupting or deleting files and system data.

- Hacking and Unauthorized Access: Malicious actors gaining unauthorized access and deleting or corrupting data.

Natural Disasters and Environmental Factors:

- Floods and Water Damage: Water damage to hardware and storage devices in floods or leaks.

- Fires: Fire damage destroying hardware and backup media.

- Power Outages and Surges: Power fluctuations causing hardware failures or data corruption.

- Extreme Temperatures: Overheating or extreme cold leading to hardware malfunction and data loss.

- Earthquakes and Physical Damage: Physical shocks and structural damage causing data loss.

Power Issues:

- Power Surges: Sudden spikes in electrical power damaging electronic components of storage devices and systems.